Building a Retrieval-Augmented Generation (RAG) AI Chatbot to Improve Customer Service

by Greg Dolder, Principal Architect

Streamlining Customer Service with AI:

Our Experiment with a Global Bicycle Manufacturer

In the never-ending challenge to improve customer service, integrating artificial intelligence (AI) offers an opportunity to enhance efficiency and significantly improve user experiences. Our team at productOps developed a Retrieval-Augmented Generation (RAG) application designed to improve customer service for a prominent global bicycle company.

This is the story of how we created a chat app that could provide customers with immediate self-service help. The goal of the project was to prove that AI via RAG is a viable customer experience option: one that reduces customer service tickets by answering questions through a chatbot before customers submit help-desk tickets, without the maintenance burden of a standard decision-tree-based chatbot.

The Challenge

The global bicycle company we partnered with faced a significant challenge in managing its customer service operations efficiently. With an expanding global customer base, increasingly complex queries, and the realization that relying on "tribal knowledge" is not a viable solution, the company sought to use technology to streamline its customer service process and improve the quality of support provided.

Our Solution:

An AI-Powered Multilingual Customer Service Chatbot

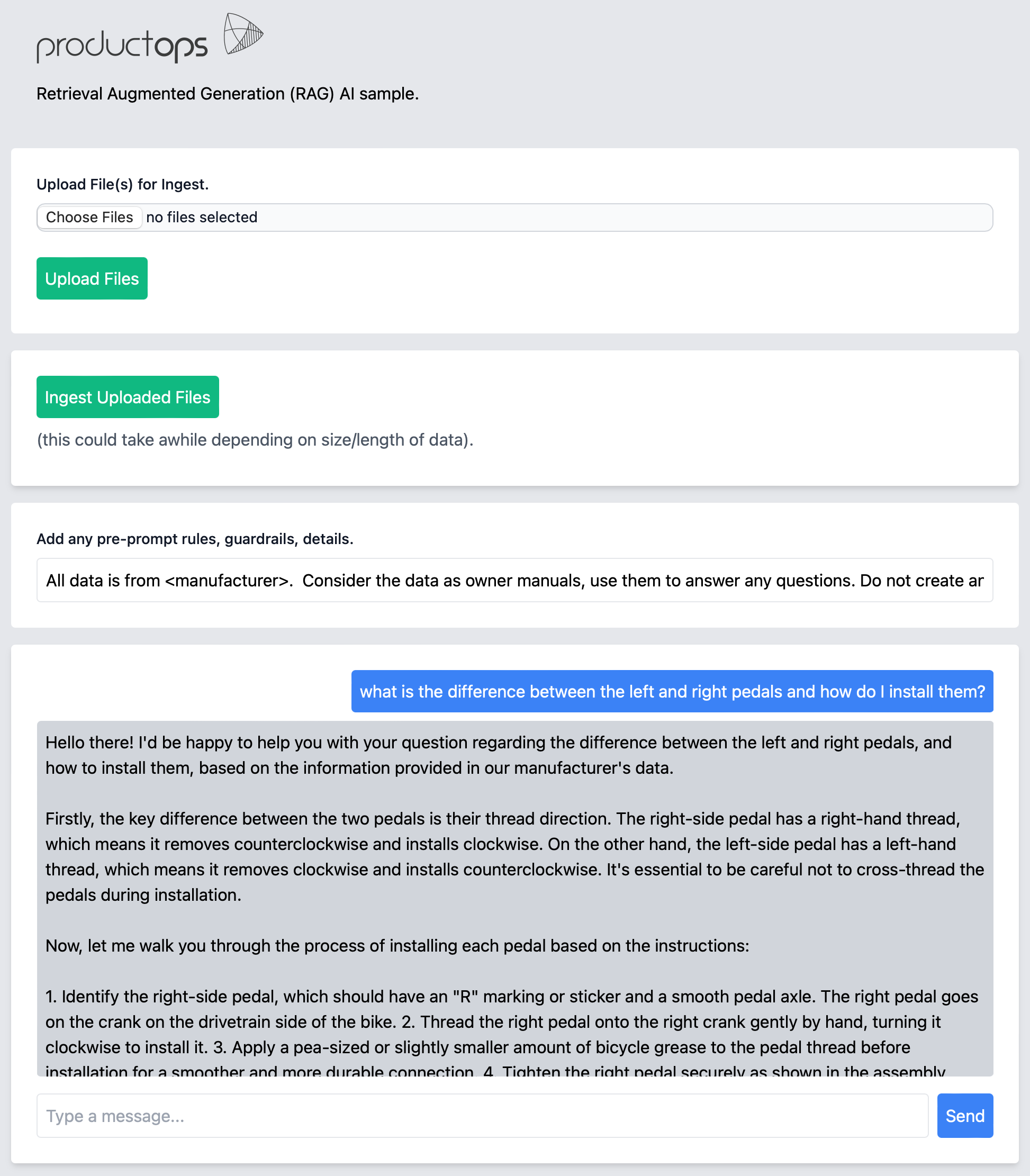

Our proof of concept (POC) was a chatbot application powered by Retrieval-Augmented Generation. This AI-driven solution was designed to understand customer queries in multiple languages, retrieve relevant information, and generate concise, accurate and informative responses. The core technologies behind our solution were the Ollama runtime, the Mistral 7B LLM, Hugging Face embeddings, and LangChain, all integrated into a Flask application.
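To make the retrieve-then-generate idea concrete, here is a minimal sketch of the retrieval step only. It is not the project's code: a toy bag-of-words similarity stands in for the Hugging Face embeddings and vector search, and the function names (`embed`, `cosine`, `retrieve`) and the sample manual snippets are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: stands in for a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query, best match first."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


# Hypothetical snippets standing in for ingested manual text.
chunks = [
    "Check tire pressure before every ride.",
    "Adjust the brake cable tension using the barrel adjuster.",
    "Clean and lubricate the chain regularly.",
]
top = retrieve("How do I adjust brake cable tension?", chunks, k=1)
print(top)
```

In a full RAG pipeline, the retrieved chunks would then be placed into the prompt sent to the LLM, so the model answers from the manuals rather than from memory alone.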

Building the Foundation with Flask and Mistral 7B LLM

We chose Flask, a lightweight Python web framework, as the foundation for our chat application because of its simplicity and flexibility for the POC, with the intent of later moving to a Streamlit chatbot or another technology in the client's stack. Flask allowed us to quickly set up an internal API that could handle customer queries in real time. The heart of our application was the set of manuals and bulletins ingested from the client, together with the Mistral 7B LLM, a language model that we hosted on our own cloud servers. By hosting our own model, we avoided the API costs that would have been incurred through third-party services like Copilot, Gemini, or OpenAI. This decision not only made our solution more cost-effective but also gave us greater control over the model's performance and customization.
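As a rough illustration of what such an internal API can look like, the sketch below exposes a single JSON endpoint in Flask. It is an assumption-laden example rather than the project's code: the `/chat` route, the `question` and `language` fields, and the `answer_question` placeholder are all hypothetical, and the placeholder does not call a model.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def answer_question(question: str, language: str) -> str:
    """Hypothetical placeholder for the RAG pipeline (retrieval plus LLM call)."""
    return f"[{language}] No answer generated for: {question}"


@app.route("/chat", methods=["POST"])
def chat():
    # Accept a JSON body such as {"question": "...", "language": "French"}.
    payload = request.get_json(silent=True)
    if not isinstance(payload, dict):
        payload = {}
    question = str(payload.get("question") or "").strip()
    language = str(payload.get("language") or "English")
    if not question:
        return jsonify({"error": "question is required"}), 400
    answer = answer_question(question, language)
    return jsonify({"answer": answer, "language": language})


if __name__ == "__main__":
    # Development server only; the port is an arbitrary choice.
    app.run(port=5000)
```

Keeping the model call behind one function makes it easier to swap the front end later, for example for a Streamlit chatbot, without touching the retrieval and generation logic.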

Leveraging Ollama, Hugging Face and LangChain

To enhance the application's ability to understand and process customer queries in multiple languages, we ran the Mistral 7B LLM on Ollama and combined it with Hugging Face embeddings and LangChain. The Mistral 7B model was crucial to supporting multiple languages (English, French, Italian, German, and Spanish for our tests). LangChain, an open-source library designed to facilitate the development of applications with language models, played a pivotal role in integrating the different components of our solution. It allowed us to connect the Mistral LLM with Hugging Face and implement the retrieval-augmented generation mechanism effectively. We also spent additional time tuning the pre-prompt, the model temperature, and the way documents were chunked for indexing, which rounded out the POC and helped keep responses accurate.
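Chunking is one of the tuning knobs mentioned above. The sketch below shows one common approach, fixed-size character chunks with overlap, so that a sentence cut at a boundary still appears whole in a neighboring chunk. This is an assumed strategy for illustration; the function name `chunk_text` and the default sizes are hypothetical and not the values used in the project.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character chunks of at most chunk_size, sharing `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# Tiny example so the overlap is easy to see.
example = chunk_text("abcdefghij", chunk_size=4, overlap=2)
print(example)
```

Smaller chunks tend to make retrieval more precise but give the model less surrounding context, so the right size is usually found by experiment.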

Multilingual Support and Data Ingestion

One of the success criteria for the project was the ability to answer questions in the brand's supported languages, a necessity for a global organization. We ingested a large selection of bicycle owner manuals, technical guides and other data into the Chroma vector database in English. The stack allowed us to support interactions in English, French, Italian, German, and Spanish while only ingesting English data. We noticed that ingesting source data in the customer's native language improved results; we tested this with several documents that were available in French. Where only English source data was available, pre-prompts requiring the model to respond in a selected language helped bridge the gap.
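A pre-prompt that pins the response language can be sketched as follows. The wording of the instruction, the `build_prompt` name, and the sample context are illustrative assumptions, not the prompt used in the project; only the list of languages comes from the tests described above.

```python
SUPPORTED_LANGUAGES = ("English", "French", "Italian", "German", "Spanish")


def build_prompt(question: str, context_chunks: list[str], language: str = "English") -> str:
    """Combine a language-pinning pre-prompt, retrieved context, and the user question."""
    if language not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language: {language}")

    context = "\n\n".join(context_chunks)
    return (
        "You are a customer service assistant for a bicycle manufacturer.\n"
        "Answer using only the context below. "
        f"Always respond in {language}, even if the context is in another language.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical usage: a French question answered from English source text.
prompt = build_prompt(
    "Comment ajuster mes freins ?",
    ["Adjust the brake cable tension using the barrel adjuster."],
    language="French",
)
print(prompt)
```

Validating the language up front keeps an unexpected value from silently producing a prompt the model may handle poorly.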

Hosting Our Model: A Strategic Move

One of the critical decisions in our project was to host the LLM in our private cloud. Given the volume of data and the limited resources for the experiment, repeatedly ingesting large amounts of data via OpenAI, Copilot or Gemini would have exceeded our budget and been cost-prohibitive in the long run. We also wanted the experiment to resemble a production environment as closely as possible, so we self-hosted both our ingestion and run-time environments.

Impact and Results

The implementation of our RAG-based AI chatbot is projected to reduce help-desk tickets by approximately 30%. This is attributed to the application's ability to provide instant, accurate responses to customer queries, allowing many issues to be resolved without human intervention. By contrast, the client's current decision-tree-based chatbot opens a ticket whenever it cannot find a satisfactory answer for the user. The application's multilingual support further ensures that customers around the globe can benefit from this AI-driven solution, leading to increased customer satisfaction and loyalty.

Conclusion

Creating a RAG-based chat application for a global bicycle company was both insightful and rewarding. We were able to develop a solution that not only meets the current needs of customer service operations but also lays a foundation for future innovation. Hosting our own model and using current AI technologies allowed us to create a cost-effective, efficient, and multilingual customer service solution. This project exemplifies our commitment to using technology to solve real-world problems and demonstrates the potential of AI to improve customer service experiences.

How can productOps help you tackle your hardest problem?