Building a RAG AI Chatbot: Unleashing the Power of Your Data

Greg Dolder

Head of Engineering • March 12, 2024

Understanding RAG AI Chatbots

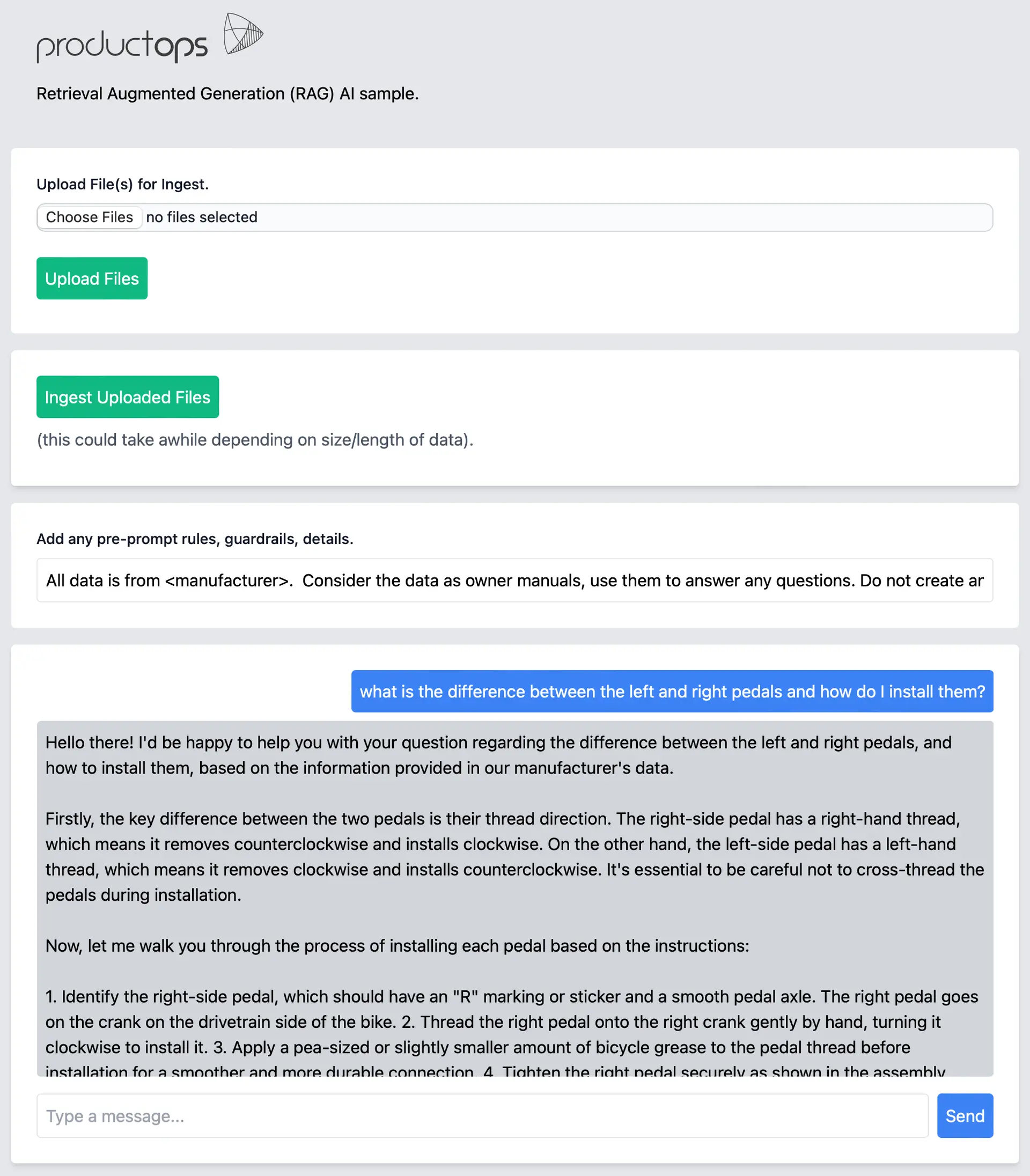

Retrieval-Augmented Generation (RAG) combines a large language model with a dedicated information retrieval step. Unlike traditional chatbots that rely solely on pre-trained knowledge, a RAG system looks up relevant passages in your organization's knowledge base at query time and passes them to the model, so responses can draw on current, organization-specific information rather than on training data alone.

The real power of RAG lies in its ability to bridge the gap between general language understanding and domain-specific knowledge. When a user poses a question, the system doesn't just generate a response from its training data. It searches your documented knowledge base, finds relevant passages, and supplies them to the model as context for the response. Grounding answers in retrieved text reduces hallucination, though it does not eliminate it, and helps keep responses aligned with your organization's specific context and requirements.
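The retrieve-then-generate flow described above can be sketched in a few lines. This is a toy illustration, not a production design: `tokenize`, `retrieve`, and `answer` are hypothetical names, word overlap stands in for real vector search, and `generate` is a placeholder for whatever LLM client you use.

```python
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {word.strip(".,!?:;").lower() for word in text.split()} - {""}


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Rank passages by word overlap with the question.

    Assumption: word overlap is only a stand-in for embedding similarity.
    """
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(p)), p) for p in passages]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop passages that share no words with the question.
    return [passage for score, passage in ranked[:top_k] if score > 0]


def answer(question: str, passages: list[str],
           generate: Callable[[str], str]) -> str:
    """Retrieve context, build an augmented prompt, and call the model."""
    context = retrieve(question, passages)
    context_block = "\n".join(f"- {passage}" for passage in context)
    prompt = (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )
    return generate(prompt)
```

Passing a function that simply echoes the prompt as `generate` is a quick way to inspect what the model would receive before wiring in a real LLM.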

Key Components of a RAG System

A production-ready RAG system consists of three essential components, each playing a crucial role in delivering accurate and contextual responses:

- Document Processing Pipeline: This component handles the ingestion, cleaning, and preparation of your knowledge base. It includes sophisticated text extraction tools, metadata management, and content validation mechanisms to ensure high-quality input data.

- Vector Database: At the heart of the system lies a specialized database designed for storing and retrieving high-dimensional vector embeddings. This component must be optimized for both speed and accuracy, capable of handling millions of embeddings while maintaining sub-second query times.

- LLM Integration Layer: This layer orchestrates the interaction between retrieved context and the language model, managing prompt engineering, context window optimization, and response generation while maintaining conversation coherence.

Document Processing and Embedding Generation

An effective RAG system depends on careful document processing, because retrieval can only return what was extracted and indexed correctly. This phase involves several key steps:

- Text Extraction and Cleaning: Implementing robust pipelines for handling various document formats (PDF, HTML, DOCX), removing irrelevant content, and standardizing text formatting. This includes handling special characters, maintaining document structure, and preserving important metadata.

- Intelligent Chunking: Developing adaptive chunking strategies that maintain semantic coherence while optimizing for context window limitations. This might involve paragraph-based splitting, sliding windows, or recursive chunking based on content structure.

- Embedding Generation: Utilizing state-of-the-art embedding models to convert text chunks into dense vector representations. This process needs to balance computational efficiency with embedding quality, often requiring careful model selection and optimization.
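As one concrete instance of the chunking strategies listed above, here is a minimal sliding-window splitter. It is a sketch under stated assumptions: `chunk_words` is a hypothetical helper, and it counts whitespace-separated words as a rough proxy for tokens, whereas a real pipeline would likely count tokens with the embedding model's own tokenizer.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping windows of words.

    Each chunk holds up to `chunk_size` words and shares `overlap` words
    with the previous chunk, so a sentence cut at a boundary still appears
    whole in at least one chunk (as long as it is shorter than the overlap).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Larger overlaps preserve more context across boundaries but increase the number of chunks to embed and store, so the two parameters are usually tuned together.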

Vector Database Implementation

Selecting and implementing the right vector database is crucial for RAG performance. Modern solutions offer various tradeoffs that need careful consideration:

- Scalability: The ability to handle growing datasets efficiently, supporting both horizontal and vertical scaling strategies. This includes considerations for index building, query performance, and resource utilization.

- Search Algorithms: Implementation of approximate nearest neighbor (ANN) algorithms such as HNSW (a graph-based index) or IVF (an inverted-file index that groups vectors into clusters), with tunable parameters for balancing search speed against recall.

- Metadata Filtering: Support for complex filtering operations during vector search, allowing for refined context retrieval based on document properties, timestamps, or custom attributes.

Integration with Large Language Models

The final step is integrating your retrieval system with the chosen LLM. This integration requires careful consideration of several factors:

- Context Window Management: Implementing smart strategies for fitting retrieved content within the LLM's context window, including prioritization algorithms and content summarization when necessary.

- Prompt Engineering: Developing robust prompt templates that effectively combine user queries with retrieved context, ensuring consistent and high-quality responses.

- Response Generation: Implementing mechanisms for response validation, fact-checking against retrieved context, and maintaining conversation history for coherent multi-turn interactions.
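Context window management and prompt templating can be sketched together. The example below greedily keeps the highest-scoring chunks that fit a token budget and then fills a template. Everything here is illustrative: `estimate_tokens`, `pack_context`, `build_prompt`, and the template wording are hypothetical, and the four-characters-per-token estimate is a rough rule of thumb for English text, not a substitute for the model's real tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate (assumption: about 4 characters per token)."""
    return max(1, len(text) // 4)


def pack_context(chunks: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily select chunks by descending relevance score within a budget.

    `chunks` holds (score, text) pairs. A chunk that does not fit is skipped,
    and smaller lower-ranked chunks may still be added afterwards.
    """
    selected: list[str] = []
    used = 0
    for _score, text in sorted(chunks, key=lambda chunk: chunk[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost > budget:
            continue
        selected.append(text)
        used += cost
    return selected


PROMPT_TEMPLATE = (
    "Use only the context below to answer. "
    "If the context is insufficient, say so.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(question: str, chunks: list[tuple[float, str]], budget: int) -> str:
    """Combine the user question with as much retrieved context as fits."""
    context = "\n---\n".join(pack_context(chunks, budget))
    return PROMPT_TEMPLATE.format(context=context, question=question)
```

Skipping oversized chunks rather than truncating them keeps each included passage intact; summarizing the skipped chunks is an alternative when their content is essential.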

Best Practices and Optimization

To ensure your RAG chatbot performs optimally in a production environment, consider these essential practices:

- Error Handling: Implement comprehensive error handling and fallback mechanisms at each layer of the system, ensuring graceful degradation when components fail.

- Performance Monitoring: Set up detailed monitoring and logging for each component, tracking metrics like latency, accuracy, and resource utilization.

- Continuous Improvement: Establish feedback loops for collecting user interactions and response quality metrics, using this data to tune retrieval, chunking, and prompts over time.

- Security Considerations: Implement proper authentication, rate limiting, and content filtering to protect both the system and its users.
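The error-handling practice above, retrying a failing component and degrading gracefully when it stays down, might look like the following. This is a minimal sketch: `with_fallback` and `FALLBACK_MESSAGE` are hypothetical names, and catching every `Exception` is a simplification, since production code would normally retry only errors known to be transient.

```python
import time
from typing import Callable

FALLBACK_MESSAGE = "Sorry, I can't answer that right now. Please try again later."


def with_fallback(primary: Callable[[], str], retries: int = 2,
                  delay_seconds: float = 0.5,
                  fallback: str = FALLBACK_MESSAGE) -> str:
    """Call `primary`, retrying with exponential backoff, then fall back.

    Makes up to `retries + 1` attempts. If every attempt raises, the
    fallback text is returned instead of propagating the error to the user.
    """
    for attempt in range(retries + 1):
        try:
            return primary()
        except Exception:
            # Wait 1x, 2x, 4x, ... the base delay between attempts.
            if attempt < retries:
                time.sleep(delay_seconds * (2 ** attempt))
    return fallback
```

The same wrapper can guard the retrieval call and the LLM call separately, so that a vector database outage and a model outage can each have their own fallback behavior.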

Conclusion

Building a production-ready RAG AI chatbot requires careful attention to each component's implementation and integration. The success of such a system depends not just on the individual components but on how well they work together to deliver accurate, relevant, and timely responses. By following these architectural principles and implementation guidelines, you can create a robust system that effectively leverages both the power of large language models and your organization's specific knowledge base.